D. D. Allan | Andy Vonasch | Christoph Bartneck

Against the backdrop of rapid advancements in AI, actuator, and sensing technologies, a new era of intelligent robots is on the rise, and these robots are expected to interact with and work alongside humans in everyday life. At the same time, however, research has found that robots capable of outperforming human beings on mental and/or physical tasks provoke perceptions of threat (i.e., threats to jobs and resources, as well as threats to human identity and distinctiveness).

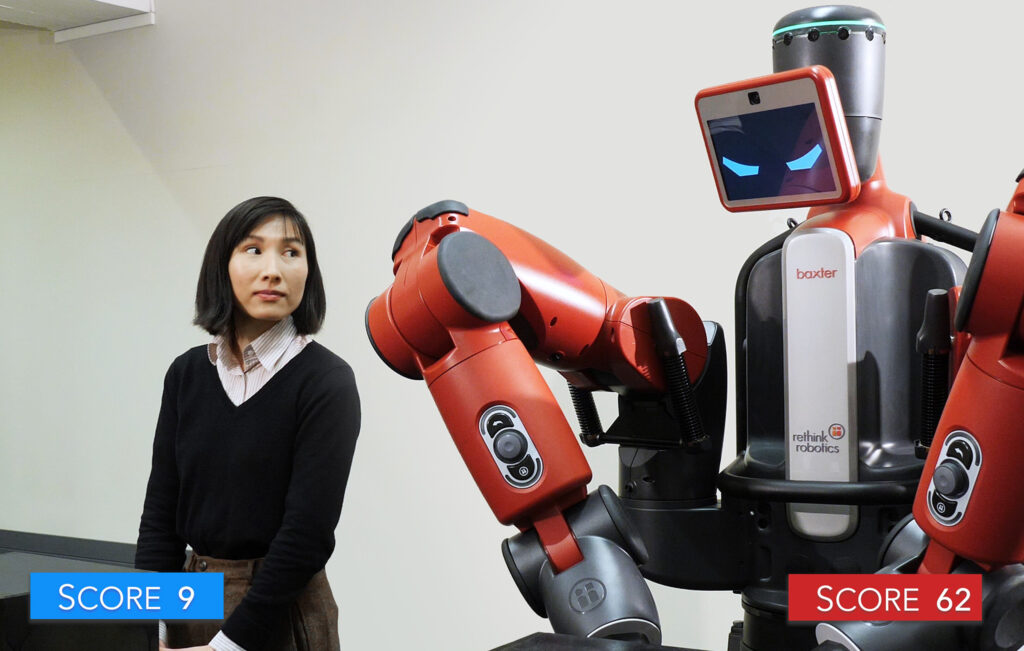

Although the perceived threat response is widely thought to be experienced by all people, in this work, we posited that this may not be the case. Specifically, we proposed that implicit self-theory (core beliefs about the malleability of self-attributes, such as intelligence) is a determinant of whether one person experiences threat perception to a greater degree than another. We tested for this possibility in a novel experiment in which participants watched a video of an apparently autonomous intelligent robot defeating human quiz players in a general knowledge game. Following the video, participants received either social comparison feedback, improvement-oriented feedback, or no feedback, and were then given the opportunity to play against the robot.

We found that those who adopt a malleable self-theory (incremental theorists) were more likely to play against a robot after imagining losing to it, and exhibited more favorable responses and less perceived threat, than entity theorists (those adopting a fixed self-theory). Moreover, entity theorists (vs. incremental theorists) perceived autonomous intelligent robots to be significantly more threatening.

These findings expand the human-robot interaction (HRI) literature by demonstrating that implicit self-theory is, in fact, an influential variable underpinning perceived threat. Additionally, these findings may hold practical value for designers and marketers. In particular, our results suggest that a robot presented as intelligent and capable of outperforming human beings could achieve greater acceptance and use among incremental theorists (vs. entity theorists). Conversely, a robot presented as less intelligent and unlikely to outperform human beings could increase the probability of acceptance among entity theorists. Together, these findings may prove useful in understanding how to prepare people for the increasing presence of robots and other forms of embodied AI.

This work is part of ongoing interdisciplinary research into the impact of implicit self-theory in the context of human-robot interaction (HRI).

To watch Baxter and the human competitors in action, check out the following video.